I have been working with technology long enough to think twice before calling a new tool the thing that will change humanity forever. In a sense, all tools do that: we are constantly evolving and are never the same. But in reality, so many technologies come and go, looking amazing before being quickly forgotten, that I always stay on the cautious side, even when talking about AI.

Artificial Intelligence, the marketing name given to generative AI such as Large Language Models (LLMs), was one of those tools. The technique has been studied for some time, but it was last year, when the first commercial applications were released to the public, that everything changed. A method that was good at producing believable responses was turned into a god: whatever it said was true, and there was no room for questioning it, or for choosing not to use it. We have seen every possible type of application using AI, from editing photos and writing essays (not this one) to chatbots that simulate celebrities (I will rant about that in a future post on this blog).

To better approach this subject, I will break it into three parts. First, let’s discuss how LLMs work (at a very high level, with no mathematical detail; you can ask ChatGPT about that). Then, we can see why AI is a term that means much more than what we have today, and how that serves marketing. Finally, we will look at why some of the fears and hopes we have today are actually quite old.

Behind the curtain

The whole idea of an AI is that it is trained on a lot of data to learn which combinations of words are more likely to show up in a sentence. Imagine that you have to guess a two-letter word that starts with N: N_. Normally, you would guess ‘No’, but it could also be the abbreviation ‘NY’, for New York. Your brain chooses ‘No’ instead of ‘NY’ because you have seen ‘No’ in far more sentences than ‘NY’; it is more probable. That’s the high-level concept behind LLMs. The difference is that they scale up: instead of trying to figure out the next letter, they guess the next word (more precisely, the next token, which is a word or a piece of a word), one after another. In a message, after ‘Hello’, it is more probable that someone will say ‘how are you?’ than ‘car saxophone drink’. And instead of relying on my 29-year-old brain for all the knowledge needed to be a good guesser, they have a billion internet articles, books, messages, and all types of copyrighted content (yes, piracy, but they don’t like that word).
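To make the guessing game concrete, here is a toy sketch in Python. It is a deliberate simplification and not how real LLMs are built: real models use neural networks over tokens and look at long stretches of context, while this one (a bigram model) only counts which word followed which in a tiny, made-up corpus and then picks the most frequent follow-up. The corpus and all function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for each word, which words follow it and how often."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following

def next_word_probabilities(following, word):
    """Turn raw follow-up counts into probabilities."""
    counts = following.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def most_probable_next(following, word):
    """Greedy choice: the follow-up seen most often (ties go to the one seen first)."""
    probs = next_word_probabilities(following, word)
    return max(probs, key=probs.get) if probs else None

def generate(following, start, max_words=10):
    """Keep appending the most probable next word until none is known."""
    words = [start.lower()]
    while len(words) < max_words:
        nxt = most_probable_next(following, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

# Hypothetical miniature "training data".
corpus = [
    "hello how are you",
    "hello how is it going",
    "hello there",
    "hello how are things",
]
model = train_bigrams(corpus)
print(next_word_probabilities(model, "Hello"))  # {'how': 0.75, 'there': 0.25}
print(generate(model, "Hello"))  # hello how are you
```

Because this toy always picks the single most probable word, it just reproduces a familiar phrase; real chatbots usually sample from the probabilities instead, which is one reason the same question can get different answers.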

Much like our brain, AIs also rely heavily on context to decide what to do. If you ask one to write an email, a poem, or a book, it will behave differently in each case: it will give the answers that are most probable in that context, drawing on the emails, poems, and books it has seen before.

Once we know that an AI’s goal is to choose what is most probable, it becomes clear that the answer it gives to any question is a believable one: something that could exist in the real world, but not necessarily something correct, something that actually exists. Sounding right does not make something right. This is where we have to understand that ChatGPT, Bard, or Bing do not really hallucinate: their goal is to sound plausible, not to be correct, so the “hallucinations”, the facts they invent, are just a way of sounding true. Of course, many other methods are being applied to reduce hallucinations and make the whole thing more trustworthy, but at the end of the day, it is not that easy. In other words, the problem is not the AIs but our expectations, and the marketing that says they will do something they won’t (at least not now, or in the near future).
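To make “plausible, not correct” concrete, here is another toy sketch, again not how ChatGPT, Bard, or Bing are actually built: it completes a prompt with whichever continuation appeared most often in a small, invented set of text snippets. Because a popular misconception appears more often than the fact in that hypothetical data, the most probable answer is the wrong one (the capital of Australia is Canberra).

```python
from collections import Counter

def complete(prompt, texts):
    """Return the continuation seen most often after `prompt`, with its share.

    This measures frequency, not truth.
    """
    continuations = Counter(
        text[len(prompt):].strip()
        for text in texts
        if text.startswith(prompt)
    )
    if not continuations:
        return None, 0.0
    answer, count = continuations.most_common(1)[0]
    return answer, count / sum(continuations.values())

# Hypothetical snippets: a common misconception shows up more often than the fact.
snippets = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",  # the correct answer
]
print(complete("the capital of australia is", snippets))  # ('sydney', 0.75)
```

Nothing in the counting step knows or cares which answer is true; it only knows which one it saw most.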

The big umbrella

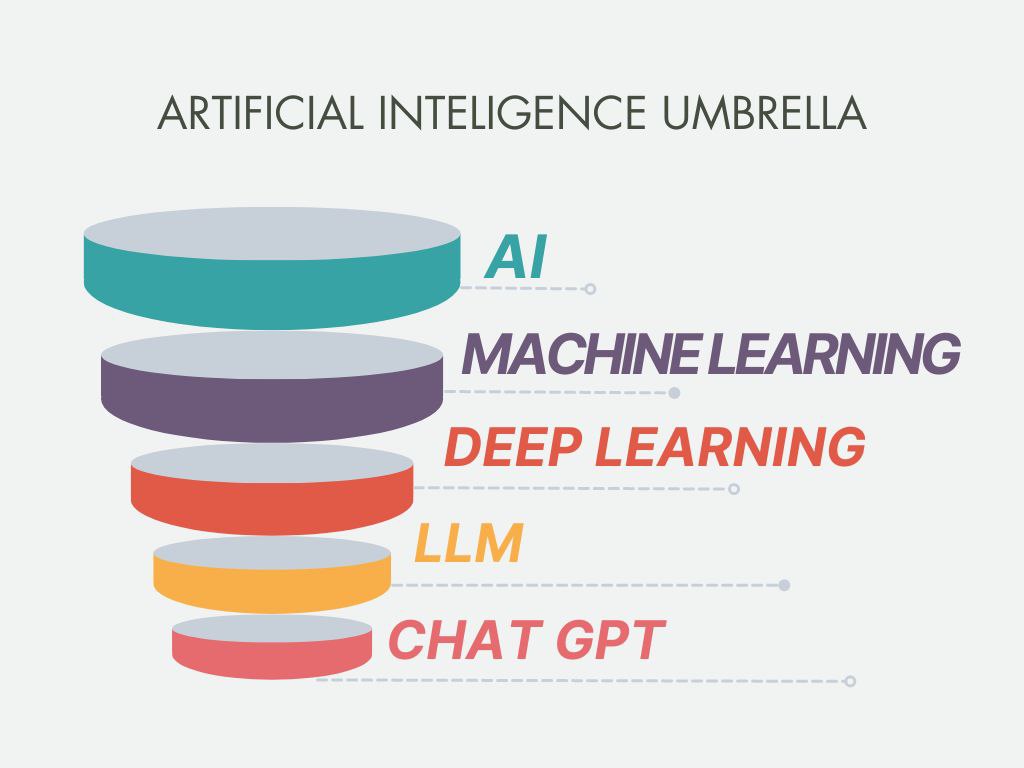

Now that we are in the same boat, the one that says “Don’t believe everything an ad or a company says; marketing can be a pretty, pretty terrible way to learn the truth”, it is time to see why not all AIs are the same. The term Artificial Intelligence was coined in 1955, and it named a field of research, a laboratory of ideas: not a thing but a concept, the idea that learning is a series of well-defined steps that a machine could simulate. This means that any machine that shows some type of intelligence, learns to solve a problem, or, more simply, makes decisions, can be called an AI. It’s as simple as that. The diagram above shows how deep ChatGPT (and similar models) sits inside the AI umbrella.

Okay, but what is the problem with calling it AI? It is an easier term than having to explain all of this to everyone. Yes, that’s right; the problem is that even though LLMs are AI, not all AI is an LLM. So when you see a product saying “We are using AI”, it could mean almost anything. Maybe they are using a really “smart” chatbot, or maybe they have an algorithm from 1990 that, depending on the time of day, says “Have you fed your Tamagotchi?”. Either way, an app that has AI will look more appealing to a user than an outdated one (that does the same thing, just without AI in the name).

Old fears and hopes

We humans love the concept of perfection. We want a fair society, equal for everyone, where no mistakes are made, which is very different from what we have today. At the same time, we fear any kind of change: we desire routine and stability, and we want to keep our privileges even if that means others remain in worse conditions (oh, what a delight it is to think about us). Technology comes across as something perfect, strict, driven by numbers that never lie, and at the same time as something we can control, something we can switch off if we don’t like where it is going (or so we wish).

In 1966, an artificial psychologist called Eliza was developed: a simple program that repeated whatever you said back to you as a question. If you said something like “I am feeling sad today”, it would answer “Why are you feeling sad today?”. No shenanigans, nothing too fancy, but enough for people to love it, find it useful, and, even more, trust it with their most private thoughts. This is a good example of how we tend to trust computers more than humans. We are afraid that our human therapist may tell someone what we told them, that a dietician will judge the way we eat, or that our professors will try to force their ideology on us (🤦), but not an app. Computers do not have those human flaws; they are perfect, so we trust them (selling our data for advertising is something we can talk about another time).
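The original Eliza, written by Joseph Weizenbaum at MIT, worked by matching keywords against a script (the best known one, DOCTOR, played a psychotherapist). The snippet below is not that script; it is a minimal sketch, assuming just two hypothetical rules and a small pronoun table, of the trick described above: reflect the user’s words and hand them back as a question.

```python
import re

# Hypothetical pronoun swaps; the real Eliza scripts had many more rules.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "you": "I",
    "your": "my",
}

def reflect(fragment):
    """Swap first- and second-person words so the sentence points back at the user."""
    words = re.findall(r"[\w']+", fragment.lower())
    return " ".join(REFLECTIONS.get(word, word) for word in words)

def respond(statement):
    """Turn a statement into a question, in the spirit of Eliza."""
    match = re.match(r"\s*i am (.+)", statement, re.IGNORECASE)
    if match:
        return f"Why are you {reflect(match.group(1))}?"
    # Fallback rule: echo the whole (reflected) statement back as a question.
    return f"Why do you say {reflect(statement)}?"

print(respond("I am feeling sad today"))  # Why are you feeling sad today?
print(respond("My mother ignores me."))   # Why do you say your mother ignores you?
```

There is no understanding anywhere in those few lines, which is exactly what makes the trust people placed in Eliza so striking.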

We have been blindly trusting computers since Eliza, and even before that, so ChatGPT and other LLMs have received the same treatment. We ask them to summarize books and articles, explain hard subjects, write emails, and generate images, and we simply trust that they are perfect and won’t make mistakes. Some people may dream about the day ChatGPT will drive their car or operate a surgical robot. And I hope that by this part of the post you are on the cautious side with me, too.

As for the fears, well, humans have feared that radio would discourage people from reading and make everyone illiterate, that TV would make everyone lazy and unable to interpret text (they were not entirely wrong), and that a bunch of other technologies would be the end of our species (even though we are doing our best to drive ourselves extinct, we are not there yet). The fear of change is understandable and rational. But just as TV may have reduced the number of jobs in radio or the printing press, it also created a bunch of new ones. AIs will reduce the need for many repetitive jobs, but they will probably create new needs. There is no one-size-fits-all solution; there is no AI that can do everything.

What’s more, with AIs doing so much, and doing it poorly, there is a growing space for human-made things. It may be fun to ask an AI to paint your family, but having a painter do the same (and do it much better) means far more. It is the same with fast food or processed store-bought meals: they have their place in the routine, day-to-day confusion we call life, but they are rarely what we choose for special events or moments, when they are swapped for restaurants or food cooked by relatives.

Let’s calm down, take a breath, cook some food, and remember that AIs are a tool, not a god.